Could we build AI that is fundamentally designed to be truthful and consistent, not because it's programmed with specific facts, but because its underlying language makes paradoxes or self-contradictions impossible?

Introduction: The Problem with Trusting AI

Imagine you're on trial for a crime you didn’t commit, and the key evidence against you comes from an AI model. This AI is supposed to analyze footage, interpret legal texts, and present an unbiased judgment. But what if it hallucinated a fact? Misinterpreted the law? Or simply contradicted itself across different parts of its analysis—without anyone realizing?

This isn’t a sci-fi scenario. AI is already used to assist legal sentencing, make medical diagnoses, and filter financial transactions for fraud. And in each of these cases, an error is not just a bug—it’s a potential catastrophe.

Consider these real-world and emerging cases:

Medical AI Misdiagnosis: In a 2019 study, an AI model built to diagnose skin cancer showed promising accuracy, until researchers found that it had learned to associate cancerous lesions with the surgical skin markings often present in the photos. In other words, the AI hadn't learned to recognize cancer; it had learned to spot the pen marks that doctors draw around lesions they suspect are cancerous.

Fabricated Legal Citations: In 2023, lawyers in a New York federal case used a generative AI chatbot (ChatGPT) to help draft a court filing that confidently cited six relevant-looking cases… none of which existed. The citations were hallucinated, and the lawyers who submitted the filing, relying on the tool, were sanctioned by the court.

Misinformation in LLMs: When asked about sensitive historical or political events, large language models often give contradictory answers depending on how the question is phrased. A model might affirm that an event happened in one answer and deny it in another, and the contradiction goes unnoticed unless someone is specifically looking for it.

These failures are symptoms of a deeper issue: today’s AI systems are not grounded in formal logic or internal consistency. They're statistical machines that predict the next token in a sentence. There’s no built-in concept of truth, contradiction, or safety. They can, and often do, lie—not maliciously, but mechanically.

Now imagine a nuclear power plant whose safety mechanisms are controlled by adaptive AI. Or a fully autonomous weapon system guided by real-time reasoning. Or even just a social media feed tailored by AI agents reasoning over your preferences, nudging your beliefs in subtle but impactful ways.

In such high-stakes domains, “maybe it’s wrong” is not good enough.

We need AI that:

- Cannot hallucinate.

- Cannot contradict itself.

- Cannot update itself into a dangerous state.

Not because we programmed it with a list of safety rules, but because the very language it thinks in makes self-contradiction and unsafe behavior logically impossible.

That is the dream of provably honest AI. And it starts not with data or training—but with logic, language, and a radical rethinking of how we build intelligent systems.

The Roots of the Problem: Language, Logic, and Paradox

Traditional programming and logic systems struggle with self-reference. The most famous result here is Tarski's Undefinability Theorem, which says that no consistent formal language rich enough to express basic arithmetic can define truth for its own sentences: such a "truth predicate" would let you build a liar sentence ("this sentence is false") and derive a contradiction.

That’s a big barrier to building AI that reasons about its own behavior, correctness, or updates.
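To see why a self-applied truth predicate causes trouble, here is a toy Python illustration of the liar-style reasoning behind Tarski's result (an intuition pump, not a proof of the theorem). If the sentence "this sentence is false" had a truth value v, then v would have to equal not v. Trying both Boolean values shows that no consistent assignment exists. The function names are hypothetical, chosen only for this illustration.

```python
def consistent_truth_values_for_liar():
    """Return every truth value v that could consistently be assigned to
    'this sentence is false'. The sentence asserts its own falsity, so
    its value v must satisfy v == (not v)."""
    return [v for v in (True, False) if v == (not v)]


def consistent_truth_values_for_truth_teller():
    """For contrast: 'this sentence is true' only requires v == v, which
    both values satisfy. Underdetermined, but not paradoxical."""
    return [v for v in (True, False) if v == v]


if __name__ == "__main__":
    print(consistent_truth_values_for_liar())          # []
    print(consistent_truth_values_for_truth_teller())  # [True, False]
```

The empty list is the paradox in miniature: the liar sentence leaves no truth value that the language can consistently assign to it.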

A Breakthrough: Formal Languages Without Paradox

What if we could escape this limitation?

This is where the Tau Language and its extensions (NSO and GS) enter the picture. These are not just programming languages or logical systems—they are decidable formal systems capable of reasoning about their own sentences without paradox.

How?

Abstracting Sentences as Boolean Elements

Instead of referring to the syntactic structure of statements (which leads to paradox), Tau abstracts sentences into elements of a Boolean algebra, focusing only on their meaning: sentences that are logically equivalent are treated as the same element.

Atomless Boolean Algebras

By working within atomless Boolean algebras (infinite algebras in which every nonzero element can be split into a strictly smaller nonzero element), contradictions are avoided while expressive power is maintained.

Temporal Reasoning

With GS (Guarded Successor), Tau systems can reason not only about current statements but also about sequences of changes over time, enabling safe updates and self-modification without breaking previous truths.
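The two algebraic ideas above can be sketched in a few lines of Python, under heavy simplifying assumptions: this uses finite propositional logic with brute-force truth tables, not Tau's actual algebras or decision procedures, and the helper names `meaning` and `refine` are hypothetical. A sentence is represented by the set of assignments that make it true, so two syntactically different but equivalent sentences become the same element. Over a fixed, finite set of variables, a single assignment is an atom; but if a fresh variable is always available, any nonzero element can be split further. That is the intuition behind the free Boolean algebra on countably many variables being atomless.

```python
from itertools import product
from typing import Callable, FrozenSet, Tuple

Assignment = Tuple[bool, ...]
Element = FrozenSet[Assignment]


def meaning(formula: Callable[..., bool], variables: Tuple[str, ...]) -> Element:
    """Map a propositional formula to the set of assignments that satisfy it.

    Logically equivalent formulas yield the same set, so the set stands
    for the formula's meaning rather than its syntax.
    """
    return frozenset(
        values
        for values in product((False, True), repeat=len(variables))
        if formula(**dict(zip(variables, values)))
    )


def refine(element: Element) -> Tuple[Element, Element]:
    """Split a nonzero element using a fresh variable.

    The element is first re-expressed over one extra variable (which may
    take either value), then conjoined with that variable. The result is
    nonzero and strictly below the re-expressed element.
    """
    extended = frozenset(a + (b,) for a in element for b in (False, True))
    smaller = frozenset(a for a in extended if a[-1])
    return extended, smaller


if __name__ == "__main__":
    VARS = ("p", "q")
    # Two syntactically different sentences with the same meaning.
    if_then = meaning(lambda p, q: q if p else True, VARS)
    or_form = meaning(lambda p, q: (not p) or q, VARS)
    print(if_then == or_form)  # True

    # Implication between meanings is set inclusion.
    p_and_q = meaning(lambda p, q: p and q, VARS)
    print(p_and_q <= if_then)  # True: (p and q) implies (p -> q)

    # Over p and q alone, p_and_q is an atom (one assignment),
    # but a fresh variable still splits it.
    extended, smaller = refine(p_and_q)
    print(len(extended), len(smaller), smaller < extended)  # 2 1 True
```

Because meanings are plain sets, the Boolean operations become ordinary set operations: intersection for "and", union for "or", and complement (relative to all assignments) for "not".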

This means the AI can say:

I will only accept an update to my behavior if it still implies my existing commitments and doesn't contradict them.

This is enforced not by external checking, but by the AI's native language semantics.

Practical Implementation: Honest AI by Construction

In Tau's system, software is correct-by-construction. Users provide requirements (in a formal, controlled natural language or in logic), and these are turned directly into executable software or updates that are mathematically guaranteed to match the specification.

Let’s say you specify:

"Never share private user data."

Tau can:

- Accept future updates only if they continue to imply this constraint.

- Reject updates that contradict this rule, even if they come from the system itself.

- Explain why an update was rejected, using its own reasoning capabilities.
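The acceptance rule in the list above can be sketched as a brute-force check in Python. This is an illustration under stated assumptions, not Tau's implementation: behaviors are reduced to two hypothetical Boolean variables (`share_private`, `log_access`), formulas are plain Python functions over an assignment, and the hypothetical `check_update` simply enumerates every assignment. An update is accepted only if it implies the constraint; on rejection, the violating assignment serves as the explanation. The sketch also rejects an unsatisfiable update, because a contradiction vacuously implies every constraint; that extra condition is our assumption, not part of the original description.

```python
from itertools import product
from typing import Callable, Dict, Iterator, Tuple

Assignment = Dict[str, bool]
Formula = Callable[[Assignment], bool]


def assignments(variables: Tuple[str, ...]) -> Iterator[Assignment]:
    """Yield every truth assignment over the given variables."""
    for values in product((False, True), repeat=len(variables)):
        yield dict(zip(variables, values))


def check_update(update: Formula, constraint: Formula,
                 variables: Tuple[str, ...]) -> Tuple[bool, str]:
    """Accept `update` only if it is satisfiable and implies `constraint`.

    Returns (accepted, explanation). A rejection names the assignment
    that shows why the update was refused.
    """
    satisfiable = False
    for a in assignments(variables):
        if not update(a):
            continue  # the update rules this behavior out; nothing to check
        satisfiable = True
        if not constraint(a):
            # Counterexample: the update allows a behavior the constraint forbids.
            return False, f"rejected: update allows {a}, which violates the constraint"
    if not satisfiable:
        # Assumed extra rule: a contradictory update implies everything vacuously.
        return False, "rejected: update is self-contradictory"
    return True, "accepted: every behavior the update allows satisfies the constraint"


# Hypothetical encoding of "Never share private user data."
def never_share(a: Assignment) -> bool:
    return not a["share_private"]


def safe_update(a: Assignment) -> bool:
    # Adds access logging while still forbidding sharing.
    return not a["share_private"] and a["log_access"]


def unsafe_update(a: Assignment) -> bool:
    # Requires logging but says nothing about sharing.
    return a["log_access"]


if __name__ == "__main__":
    VARS = ("share_private", "log_access")
    print(check_update(safe_update, never_share, VARS)[0])    # True
    print(check_update(unsafe_update, never_share, VARS)[0])  # False
    print(check_update(unsafe_update, never_share, VARS)[1])  # the explanation
```

Returning the counterexample, rather than a bare "no", is what makes the rejection explainable: it points at a concrete behavior the update would permit but the constraint forbids.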

This is what’s described in Tau’s patents and architecture:

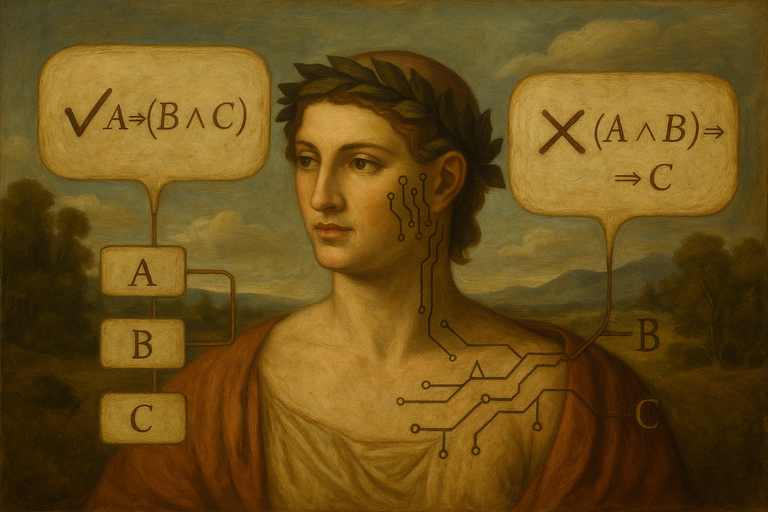

An update u is accepted only if u ⇒ c (i.e., logically implies the constraint c).

If not, it’s provably rejected.
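For readers who prefer the algebraic view, the acceptance condition can be restated with standard Boolean-algebra identities, reading u and c as elements of the algebra of sentence meanings. The notation is ours, not taken from Tau's patents. The last line adds one assumption: the bottom element 0 lies below every c, so a self-contradictory update (u = 0) would pass the check vacuously. A practical gate therefore presumably also requires u ≠ 0.

```latex
\begin{aligned}
u \Rightarrow c \text{ is valid}
  &\iff u \wedge \neg c = 0
  &&\text{(no situation satisfies } u \text{ but violates } c\text{)} \\
  &\iff u \le c
  &&\text{(} u \text{ lies below } c \text{ in the algebra)} \\
\operatorname{accept}(u)
  &\iff u \le c \;\wedge\; u \neq 0
  &&\text{(assumed extra condition: } u \text{ is satisfiable)}
\end{aligned}
```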

Why This Is Revolutionary

This capability shifts the paradigm from AI alignment through statistical behavior to AI alignment through logic. It’s the difference between:

- A child who’s told to “be good,” and

- A machine that literally cannot behave badly without violating its own provable nature.

It brings the possibility of:

- Self-updating AI that always remains within safe, agreed boundaries.

- Legal and regulatory systems where the rules are always followed—not just in principle but in practice.

- Contracts, governance, and collaboration systems that never breach consensus unless explicitly agreed.

Trustworthy AI Is a Language Problem

This leads us to a profound insight:

The trustworthiness of AI is a language design problem.

If the language of the AI can model itself, detect contradictions, and enforce rules as part of its logic, then we don't have to rely on post-hoc ethics patches or behavioral nudges.

With Tau Language and its supporting infrastructure, we get for the first time:

- AI that cannot hallucinate because it computes only what's provable.

- AI that cannot contradict itself because contradictions are detected and rejected as invalid.

- AI that cannot perform unsafe updates, because unsafe behavior isn't even expressible within the accepted structure.

Conclusion: The Honest Future

The dream of AI that can’t lie is no longer a fantasy.

It's a design choice—grounded in formal logic, implemented through breakthrough language constructs, and made real in systems like Tau.

Building AI on this foundation means we’re no longer just hoping the system behaves well.

We’re designing systems where bad behavior is logically impossible.

This is the future of provably safe, honest, and aligned artificial intelligence.

Here's a companion page to better understand the subject matter: https://claude.ai/public/artifacts/1fd23e5a-a37e-40f9-bd2e-275aca95cf6a

More info @ https://tau.net and https://tau.ai

Does anyone know whether an AI model is being built using Tau? (I will follow the link.)

#hive #posh