Since my last update, I've been stabilizing the core HAF sync code so that it can sync all the way up to the current head block, in preparation for a public beta deployment. This involved numerous blockchain replays and HAF Plug & Play state replays, along with tuning various SQL functions for performance. I'm pleased to announce that I am now working on an official public beta release, which I expect to publish next weekend. This coming week, I will be working with @brianoflondon to integrate Podping's data sets on the development server.

Core fixes

Pull request: https://github.com/imwatsi/haf-plug-play/pull/3

Transaction hash retrieval

The previous SQL code ran into performance issues when processing data beyond block 10,000,000. I discovered that the query used to retrieve transaction_id from the hive.plug_play_transactions_view view was slowing the whole process down. I changed how the core HAF sync script processes individual ops: it now fetches the transaction hashes one by one, as the ops are being recorded in the main plug_play_ops table.
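As a rough illustration of the per-op lookup described above, here is a minimal sketch. It is not the project's actual code: it uses an in-memory SQLite database as a stand-in for PostgreSQL, and the `transactions` table, its columns, the columns of `plug_play_ops` and the helper names `make_demo_db` and `record_ops` are all assumptions made for the example.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with hypothetical stand-in tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- stand-in for hive.plug_play_transactions_view (columns assumed)
        CREATE TABLE transactions (
            block_num INTEGER, trx_in_block INTEGER, trx_hash TEXT,
            PRIMARY KEY (block_num, trx_in_block)
        );
        -- stand-in for the main plug_play_ops table (columns assumed)
        CREATE TABLE plug_play_ops (
            block_num INTEGER, trx_hash TEXT, body TEXT
        );
        """
    )
    return conn


def record_ops(conn: sqlite3.Connection, ops) -> None:
    """Record each op, fetching its transaction hash with a single-row lookup.

    `ops` is an iterable of (block_num, trx_in_block, body) tuples.
    """
    cur = conn.cursor()
    for block_num, trx_in_block, body in ops:
        # One small keyed lookup per op instead of one large query up front.
        row = cur.execute(
            "SELECT trx_hash FROM transactions "
            "WHERE block_num = ? AND trx_in_block = ?",
            (block_num, trx_in_block),
        ).fetchone()
        trx_hash = row[0] if row else None
        cur.execute(
            "INSERT INTO plug_play_ops (block_num, trx_hash, body) "
            "VALUES (?, ?, ?)",
            (block_num, trx_hash, body),
        )
    conn.commit()
```

The lookup is keyed on the (block number, position in block) pair, so each op costs one indexed read; whether that matches the real view's keys is an assumption.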

Batch processing

Previously, the core HAF sync code processed the whole block range in one pass, regardless of how many blocks needed to be processed. I added batch processing to divide the block range into batches of a configurable size. BATCH_PROCESS_SIZE is currently set to 1,000,000, so the script processes one million blocks per round.

This is useful when the script needs to be stopped, for debugging or other reasons. During the initial massive sync, it then only has to discard data from the batch still being processed, which is at most one million blocks.
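To make the batching idea concrete, here is a minimal sketch of splitting a block range into fixed-size batches. Only the name BATCH_PROCESS_SIZE and its value come from this post; the function `batch_ranges` and its signature are hypothetical and are not taken from the HAF Plug & Play code base.

```python
from typing import Iterator, Tuple

BATCH_PROCESS_SIZE = 1_000_000  # value quoted in this post


def batch_ranges(
    first_block: int,
    last_block: int,
    batch_size: int = BATCH_PROCESS_SIZE,
) -> Iterator[Tuple[int, int]]:
    """Yield inclusive (start, end) ranges covering first_block..last_block."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    start = first_block
    while start <= last_block:
        # The final batch may be shorter than batch_size.
        end = min(start + batch_size - 1, last_block)
        yield start, end
        start = end + 1


if __name__ == "__main__":
    for start, end in batch_ranges(1, 2_500_000):
        print(f"processing blocks {start}..{end}")
```

If each batch is committed only once it completes, stopping the script loses at most the batch in progress, which is the behaviour described above.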

Status updates

The HAF sync process now keeps track of the latest block_number, timestamp and hive_rowid processed. This involved adding new SQL code to the main HAF sync process.
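A minimal sketch of what such a status record could look like in memory is shown below. The three field names come from this post; the `SyncStatus` class, the `advance` method and the assumption that hive_rowid only ever increases are mine, and the real implementation lives in SQL rather than Python.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SyncStatus:
    """Latest position reached by the sync process (illustrative only)."""

    block_num: int = 0
    timestamp: Optional[str] = None
    hive_rowid: int = 0

    def advance(self, block_num: int, timestamp: str, hive_rowid: int) -> bool:
        """Move the status forward; ignore ops that are not newer.

        Assumes hive_rowid grows monotonically with processing order.
        """
        if hive_rowid <= self.hive_rowid:
            return False
        self.block_num = block_num
        self.timestamp = timestamp
        self.hive_rowid = hive_rowid
        return True
```

Keeping all three values together means a status query can report both where the sync is in the chain and how recent that data is.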


You can view the current sync state of HAF Plug & Play and its plugs by either:

- visiting https://plug-play-beta.imwatsi.com/ or

- making this call on the API - https://github.com/imwatsi/haf-plug-play/blob/dev/docs/api/standard_endpoints.md#get_sync_status

Follow plug fixes

There was an issue with the core SQL function for updating follow state and its history, which caused very long delays. I fixed the code in this same pull request.

Reblog and community plugs and stability fixes

Pull request: https://github.com/imwatsi/haf-plug-play/pull/4

I finished work on the reblog plug and part of the community plug. I'm adding new SQL code, endpoints and SQL queries to support more community ops. For now, only subscribe and unsubscribe ops are supported.

New feature

I am working on support for parsing JSON data from posts and comments. This feature is in the alpha stage. It will help apps that use JSON in posts and comments to populate the state of their various content-related features.
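For illustration, here is a minimal sketch of defensively parsing a JSON string attached to a post or comment. It assumes the data arrives as a raw string (as with the json_metadata field of Hive comment operations); the post does not say which field the feature reads, and the function name `parse_post_json` is hypothetical.

```python
import json
from typing import Any, Dict


def parse_post_json(raw: str) -> Dict[str, Any]:
    """Decode a JSON object from a post or comment.

    Returns an empty dict when the input is empty, is not valid JSON,
    or decodes to something other than a JSON object.
    """
    if not raw:
        return {}
    try:
        data = json.loads(raw)
    except (TypeError, ValueError):  # json.JSONDecodeError subclasses ValueError
        return {}
    return data if isinstance(data, dict) else {}
```

Returning an empty dict on bad input lets a sync loop skip malformed content without stopping, which matters because this data is user-supplied.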

Documentation

I prepared documentation for the following:

- followplug (historical search and custom queries)

- reblogplug (historical search and custom queries)

- communityplug (subscribe and unsubscribe ops)

Find it here: https://github.com/imwatsi/haf-plug-play/blob/dev/docs/api/api.md

Public Beta Node

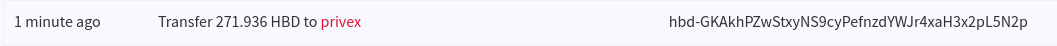

I purchased a dedicated server for the production node.

After the recent stabilization work, I have started preparing a release for the public beta node. I expect to announce it in next weekend's update post.

That's it for this update!

I run a Hive witness node:

- Witness name:

imwatsi - HiveSigner

- Witness name: