The images formed by optical (and other imaging) devices can be ‘caught’ and stored in a variety of ways, ranging from the biochemical method using eyes and brain, through the traditional photochemical processes of film and plate cameras, to the newer systems, which include video cameras, charge-coupled devices (CCDs), magnetic discs and compact discs.

While the imaging process itself (using lenses and mirrors) depends on the wave aspect of light, image-capturing systems generally rely on the particle aspect of light. It is the energy of the photon that determines how the devices work and are designed.

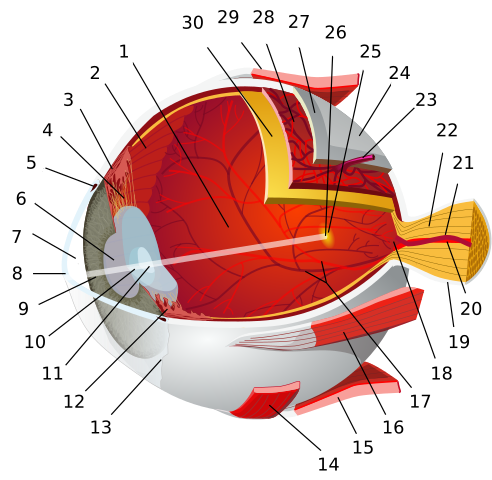

THE HUMAN EYE

FOCUSING:

Details of the eye are shown in the figure below. Light entering the eye is focused by two lenses. First, the light meets the cornea, a curved, transparent and fairly hard layer. It acts as a fixed-focus lens and, in fact, does most of the refracting of the light entering the eye. Then the light reaches the lens, which refracts it only slightly. This is mainly because the lens is surrounded by substances of almost the same refractive index as itself, so the refraction (change in speed and direction) of light at its surfaces is quite small.

The job of the lens is to adjust the focal length of the cornea-lens combination, so that the normal eye can focus on objects at any distance between about 25 cm (the near point) and infinity (the far point). The focal length is adjusted by changing the shape of the lens: a ring of muscle round the lens, called the ciliary muscle, changes the radii of curvature of its surfaces.

When the eye is relaxed, the radial suspensory ligaments keep the lens under tension, so it is relatively thin. The eye then forms clear images of objects at the far point (infinity, rays parallel), and the focal length of the cornea-lens combination equals the length of the eye, about 17 mm. When the eye looks at a near object (rays diverging), the ciliary muscle contracts, the lens becomes fatter and the focal length is shortened, so that the rays are refracted more strongly and still form a focused image on the retina. This change in lens shape is called accommodation; it happens rapidly because of swift feedback between brain and eye.
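The focusing figures above can be checked with the lens formula from the summary, 1/u + 1/v = 1/f. This is a rough sketch, assuming the cornea-lens combination behaves as a single thin lens whose image distance is fixed at the 17 mm length of the eye; the helper name `eye_power` is purely illustrative.

```python
# Thin-lens sketch of accommodation, using the real-is-positive form
# 1/u + 1/v = 1/f with distances in metres (power P = 1/f in dioptres).
# Assumption: the whole eye is modelled as one thin lens 17 mm from the retina.

EYE_LENGTH_M = 0.017  # image distance v, fixed by the size of the eyeball


def eye_power(object_distance_m: float) -> float:
    """Power (dioptres) needed to focus an object at distance u onto the retina."""
    inv_u = 0.0 if object_distance_m == float("inf") else 1.0 / object_distance_m
    return inv_u + 1.0 / EYE_LENGTH_M


far = eye_power(float("inf"))  # relaxed eye, far point at infinity
near = eye_power(0.25)         # normal near point, 25 cm

print(f"relaxed: P = {far:.1f} D, f = {1000 / far:.1f} mm")
print(f"near:    P = {near:.1f} D, f = {1000 / near:.1f} mm")
print(f"accommodation needed: {near - far:.1f} D")
```

On this simple model, focusing on an object at the 25 cm near point needs about 4 dioptres more power than focusing at infinity, and that extra power is what accommodation has to supply.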

SENSING:

The quantity of light entering the eye depends on the size of the pupil, the hole in the iris. Its size is controlled by the muscles of the iris. The light travels on to reach the retina which contains two types of light-sensitive cells, called rods and cones because of their shape. It is the functioning of these cells that enables us to ‘see’. Briefly, this is the mechanism. The rods and cones contain light-sensitive pigment molecules. Photons of particular energies (frequencies) reach the pigment molecules. These molecules absorb energy and a reversible photochemical reaction is triggered. The molecules then rapidly lose energy in a process that generates an electrical potential, and a signal is transmitted to the brain via the optic nerve.
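Because the sensing mechanism depends on photon energy, it helps to see how large that energy is. Below is a short sketch using E = hf = hc/λ, with rounded values of the constants. The wavelengths chosen for blue, green and red light are illustrative assumptions and do not come from the text.

```python
# Photon energy E = h c / lambda for a few visible wavelengths.
PLANCK_H = 6.626e-34   # J s
LIGHT_C = 2.998e8      # m/s
EV = 1.602e-19         # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon of the given wavelength, in electronvolts."""
    return PLANCK_H * LIGHT_C / (wavelength_nm * 1e-9) / EV


# Illustrative wavelengths (assumed): roughly blue, green and red light.
for label, wl in [("blue", 450), ("green", 530), ("red", 650)]:
    print(f"{label:5s} {wl} nm -> {photon_energy_ev(wl):.2f} eV")
```

Each visible photon carries only a few electronvolts, which is the same order as the energies involved in chemical changes in molecules.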

The fovea is the point on the retina directly in line with the lens. It is where an image is resolved in the finest detail and is the part of the retina most crowded with cones. Rods are more numerous away from the fovea. The average human eye contains about 125 million rods but only about 7 million cones.

Rods can ‘distinguish’ between different intensities of light, but not between light of different frequencies (photon energies). They are more sensitive at low light intensities and are particularly effective for seeing in the dark. Since there are more of them away from the centre of the retina, at night, faint objects are most easily seen ‘out of the corner of the eye’. If we suddenly move from bright light to darkness, or a light is switched off, it takes our eyes time to adjust to the dark and make out our surroundings. In this time, the photochemical pigment in the rods, which was broken down by bright light, is gradually resynthesised. When the retina is again sensitive to dim light, we say it is dark-adapted.

Cones allow us to see colour. Theories of colour vision vary, but it is generally accepted that there are three types of cone, sensitive to overlapping regions of the spectrum centred on the red, green and blue wavelengths.

It is thought that the enormous range of colours we can see around us is due to different combinations of the three types of cone being stimulated, and to the way the brain interprets the signals from them. Red, green and blue are the additive primary colours of optics, and any pair of them produces a secondary colour:

- red + green (that is, white minus blue) produces the sensation of yellow.

- red + blue (white minus green) produces the sensation of magenta.

- green + blue (white minus red) produces the sensation of cyan.

When all three types are strongly stimulated by the energy range of photons found in daylight, the brain interprets the signals as ‘white’. (In reality, colour vision is much more complicated than this simple description suggests.)
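The additive rules in the list above can be imitated with an idealised RGB model in which each channel is simply on (1) or off (0). This is only a sketch. As noted above, real colour vision is more complicated, and this model ignores the overlapping sensitivities of the cones. The names `RGB`, `add` and `minus` are hypothetical.

```python
from typing import NamedTuple

# Additive colour mixing in an idealised RGB model: each channel is 0 or 1.


class RGB(NamedTuple):
    r: int
    g: int
    b: int


RED, GREEN, BLUE = RGB(1, 0, 0), RGB(0, 1, 0), RGB(0, 0, 1)
WHITE = RGB(1, 1, 1)


def add(*colours: RGB) -> RGB:
    """Additive mix: a channel is on if any light source supplies it."""
    return RGB(*(max(channel) for channel in zip(*colours)))


def minus(base: RGB, removed: RGB) -> RGB:
    """Remove one primary from a mixture (e.g. white minus blue)."""
    return RGB(*(b & (1 - r) for b, r in zip(base, removed)))


NAMES = {RGB(1, 1, 0): "yellow", RGB(1, 0, 1): "magenta",
         RGB(0, 1, 1): "cyan", WHITE: "white"}

print(NAMES[add(RED, GREEN)], NAMES[minus(WHITE, BLUE)])   # yellow yellow
print(NAMES[add(RED, BLUE)], NAMES[add(GREEN, BLUE)])      # magenta cyan
print(NAMES[add(RED, GREEN, BLUE)])                        # white
```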

DEFECTS OF THE EYE

The near point is the closest distance at which we can see objects clearly, and this depends largely on the strength or weakness of the ciliary muscles. The distance of the near point increases with age: at 10 years, it is about 18 cm; in a young adult, 25 cm; and by the age of 60, it may be as much as 5 metres.

This age-related defect is called presbyopia. Its effect is like that of far sight, or hypermetropia: the eye lens is too weak, so near objects are blurred because their images would be formed behind the retina. The figure below illustrates these defects and how spectacles with positive (converging) lenses can correct them.

Another common defect is short sight, or myopia. In this case the focusing power of the eye is too great for its length, so even when the ciliary muscles are relaxed, distant objects are focused in front of the retina. Negative (diverging) lenses correct this defect, as shown. The lens in the ageing eye tends to become less and less flexible, so that it becomes practically a ‘fixed-focus’ device. A person may then need two pairs of spectacles: one for seeing close objects clearly (‘reading glasses’, with positive lenses) and one for seeing distant objects clearly (with negative lenses or weaker positive ones). Some people wear bifocals, which are spectacles with lenses that have a ‘long-distance’ part and a ‘reading’ part. Others wear varifocals, whose power varies gradually from top to bottom.
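The lens powers needed can be estimated with the same lens formula, 1/u + 1/v = 1/f, taking a virtual image distance as negative. Below is a minimal sketch. It assumes a thin spectacle lens right at the eye, ignoring the small gap between spectacles and eye. The 1 m near point and the 2 m far point are illustrative values.

```python
# Spectacle power from 1/u + 1/v = 1/f (real-is-positive; virtual image => v < 0).
# The spectacle lens must form an image where the unaided eye CAN focus.


def reading_lens_power(near_point_m: float, reading_distance_m: float = 0.25) -> float:
    """Power (D) so an object at the reading distance appears at the near point."""
    return 1.0 / reading_distance_m + 1.0 / (-near_point_m)


def distance_lens_power(far_point_m: float) -> float:
    """Power (D) so an object at infinity appears at the eye's far point."""
    return 0.0 + 1.0 / (-far_point_m)


print(f"near point 1.0 m -> {reading_lens_power(1.0):+.2f} D (converging)")
print(f"far point 2.0 m  -> {distance_lens_power(2.0):+.2f} D (diverging)")
```

The result is positive for far sight and negative for short sight, matching the converging and diverging lenses described above.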

At any age, the eye may have a condition called astigmatism. The eye cannot focus on vertical and horizontal lines at the same time, and a point source tends to be seen as a line. This happens because a refracting surface of the eye is not spherically symmetric. Usually this is the cornea, but sometimes it is the lens. For example, its radius of curvature in the horizontal direction is not equal to its radius in the vertical direction. Astigmatism is corrected by cylindrical lenses with curvatures that counterbalance the eye's curvatures.

DIGITAL CAMERAS

Digital cameras use charge-coupled devices, known as CCDs. CCDs are also used as the image sensors in astronomical telescopes. A CCD consists of a large number of very small picture-element detectors, called pixels. A good digital camera may have a CCD with an array of perhaps 16 million pixels, each a tiny electrode built onto a thin layer of silicon.

When a photon hits the silicon layer, it creates an electron-hole pair. Each pixel electrode collects the charge produced, which depends on the intensity of the light falling on that part of the silicon. After a brief exposure, the electrodes are made to release their charge in a programmed sequence as the pixels are scanned electronically. The scan produces a digital signal consisting of a linear sequence of numbers, which can be stored in a small memory device such as a flash memory card. This data is later transferred to a computer, which can display the image on a screen, send it to a colour printer, or send it across the internet.
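The capture-and-readout sequence described above can be sketched as a toy model. The 70 % quantum efficiency is the figure quoted for CCDs further on. The full-well capacity and the number of electrons per digital count are assumed values, chosen only for illustration. A real CCD shifts each charge packet across the chip to a single output amplifier. This sketch models only the order in which pixel values come out, one row after another.

```python
# Toy model of CCD exposure and readout (all parameters are assumptions).
QUANTUM_EFFICIENCY = 0.70   # fraction of photons that free an electron
FULL_WELL = 20_000          # max electrons one pixel can hold (assumed)
ELECTRONS_PER_COUNT = 5.0   # converter gain: electrons per digital count (assumed)


def expose(photons: list[list[int]]) -> list[list[float]]:
    """Charge collected in each pixel, capped at the full-well capacity."""
    return [[min(p * QUANTUM_EFFICIENCY, FULL_WELL) for p in row] for row in photons]


def read_out(charge: list[list[float]]) -> list[int]:
    """Scan pixels row by row into one linear sequence of digital numbers."""
    return [round(q / ELECTRONS_PER_COUNT) for row in charge for q in row]


# A 2 x 3 "image": the number of photons that reached each pixel.
photons = [[0, 1_000, 2_000],
           [10_000, 20_000, 40_000]]
print(read_out(expose(photons)))  # [0, 140, 280, 1400, 2800, 4000]
```

The last pixel has saturated. Once a pixel's charge reaches its assumed full-well capacity, extra light adds nothing.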

Digital ‘movie films’ can be taken in a similar way by camcorders and stored on tape or on a disc, but larger memories are needed. Colour pictures require three sets of sensitive areas with colour filters (red, green and blue), which produce a more complicated output signal containing colour as well as brightness information.
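A quick calculation shows why moving pictures need larger memories. The sketch assumes 8 bits for each of the three colour channels and no compression. Real cameras compress their data heavily, so these figures are upper-end, uncompressed values.

```python
# Rough uncompressed data sizes (assumptions: 8 bits per colour channel,
# 3 channels per pixel, no compression).
BYTES_PER_PIXEL = 3


def still_size_mb(pixels: int) -> float:
    """Size of one uncompressed still image in megabytes."""
    return pixels * BYTES_PER_PIXEL / 1e6


def video_rate_mb_per_s(width: int, height: int, frames_per_s: int) -> float:
    """Uncompressed video data rate in megabytes per second."""
    return width * height * BYTES_PER_PIXEL * frames_per_s / 1e6


print(f"16-megapixel still: {still_size_mb(16_000_000):.0f} MB")
print(f"1920x1080 video at 25 frame/s: {video_rate_mb_per_s(1920, 1080, 25):.0f} MB/s")
```

One uncompressed 16-megapixel still is about 48 MB. Uncompressed full-HD video at 25 frames per second needs roughly 156 MB every second.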

CCDs are sensitive to light of wavelengths from 400 nm (violet) to 1000 nm (infrared). Their main advantage in both scientific and everyday applications is their efficiency. Photographic film uses less than 4 per cent of the photons reaching it. The ‘wasted’ photons simply miss the sensitive grains in the film, or fail to trigger a chemical change. CCDs can make use of about 70 per cent of the incident photons, partly because they cover a larger area but mainly because the pixels are more sensitive than the chemicals in a film. (The eye is only about 1 per cent efficient at sensing photons.)

In astronomy, the collection time can be made very much longer than in a TV camera, allowing detail in a faint object to build up. Even so, the detection efficiency of CCDs means that the time of exposure is much less than is needed for a chemical photographic image. Also, the output current is proportional to the number of photons arriving, which means that the intensity of spectral lines and the brightness of stars can be measured more accurately than is possible with photographic methods.
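The efficiency figures quoted above translate directly into exposure times. Below is a minimal sketch. It assumes that photons arrive at a steady rate and that the recorded signal is simply proportional to the number detected, which is the linear response mentioned for CCDs. The source brightness and the target count are hypothetical.

```python
# Time to record a target number of photon detections, using the detection
# efficiencies quoted in the text (film ~4 %, CCD ~70 %, eye ~1 %).
EFFICIENCY = {"photographic film": 0.04, "CCD": 0.70, "human eye": 0.01}


def exposure_time_s(target_detections: float, photon_rate_per_s: float,
                    efficiency: float) -> float:
    """Seconds needed if photons arrive at a steady rate (an assumption)."""
    return target_detections / (photon_rate_per_s * efficiency)


# Hypothetical faint source: 100 photons/s reach one image element,
# and 10 000 detections are wanted for a usable signal.
for name, eff in EFFICIENCY.items():
    print(f"{name:17s} {exposure_time_s(10_000, 100, eff):8.0f} s")
```

With these numbers the CCD needs about 143 s where film needs 2500 s. This is a factor of 0.70/0.04 = 17.5, whatever the brightness of the source.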

Photographic plates do have the advantage that the light-sensitive grains are smaller than CCD pixels, so they can produce images with better resolution. But CCDs can be ‘butted’ together to make larger image areas. CCD technology is developing rapidly and may well take over from chemical photography as LCD display screens take over from cathode ray tube displays.

Scanners are now commonly used to convert a photograph into a stream of data for storage in a computer. They work by digitising the photograph’s continuously varying light intensities and colour.
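Digitising a continuously varying intensity means sampling it at regular points and rounding each sample to one of a fixed number of levels. In this sketch the brightness profile along one scan line is made up, and the use of 8 bits (256 levels) per sample is an assumption. A colour scanner would do the same thing separately for red, green and blue.

```python
import math

# Digitising a continuously varying intensity: sample it at regular points
# and round each sample to one of 2**bits levels.


def intensity(x: float) -> float:
    """Hypothetical brightness along one scan line, between 0 and 1."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * x)


def digitise(samples: int, bits: int = 8) -> list[int]:
    """Sample the scan line `samples` times and quantise to 2**bits levels."""
    levels = 2 ** bits - 1
    return [round(intensity(i / samples) * levels) for i in range(samples)]


print(digitise(8))
```

Using more samples gives finer spatial detail, and using more bits gives finer steps of brightness.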

DISPLAY SCREENS

We already see a great deal of the world on our TV screens, and developments in information technology have made everyday communication by email more common than the printed word. There are two main kinds of screen used to display this information. The first is the cathode ray tube (CRT), with a phosphor screen that is made to glow by high-speed electrons. The second is the liquid crystal display (LCD), in which an array of liquid crystal pixels controls the light from a backlight.

The cathode-ray tube

Cathode ray tubes (CRTs) with phosphor screens are the oldest type: ‘cathode rays’ was the name given to streams of what were later discovered to be electrons. They were emitted from the negative electrode (cathode) of a high voltage discharge tube. Nowadays, electrons are produced by heating a metal oxide. Energy transferred from the electrons as they reach the screen makes the phosphor coating emit light.

CRTs have long been the most common tubes for TV receivers, radar displays and computer monitors, and they are also used in laboratory equipment such as cathode ray oscilloscopes. CRTs waste energy by having to heat the electron emitter, and they need high voltages (over 2 kV) to accelerate the electrons to energies capable of exciting the phosphor. Although the technology is well established, CRTs are gradually going out of use.

A 14-inch cathode-ray tube showing its deflection coils and electron guns. Blue tooth7 CC BY-SA 3.0
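The energy an electron gains in a CRT is eV, where V is the accelerating voltage. The sketch below works out the resulting electron speed. It uses the relativistic energy relation so that it stays valid at high voltages. Only the 2 kV figure comes from the text. The 25 kV value is an assumed figure typical of colour TV tubes.

```python
import math

# Speed of an electron accelerated from rest through a potential difference V.
# Kinetic energy gained: eV. The relativistic relation keeps this valid
# at tens of kilovolts.
E_CHARGE = 1.602e-19    # C
E_MASS = 9.109e-31      # kg
C = 2.998e8             # m/s


def electron_speed(voltage: float) -> float:
    """Speed (m/s) after acceleration through `voltage` volts."""
    rest_energy = E_MASS * C**2
    gamma = 1 + E_CHARGE * voltage / rest_energy   # total energy / rest energy
    return C * math.sqrt(1 - 1 / gamma**2)


for v in (2_000, 25_000):
    print(f"{v / 1000:>4.0f} kV -> {electron_speed(v):.2e} m/s "
          f"({electron_speed(v) / C:.0%} of c)")
```

At 2 kV the electrons already move at about 9 % of the speed of light, and at 25 kV they reach about 30 %.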

Liquid crystal display screens

Many portable devices, such as calculators, watches and laptop computers, use liquid crystal displays (LCDs). The image is produced because a liquid crystal can rotate the plane of polarisation of light. When the crystal is placed between polarising filters, it therefore controls whether light polarised in a particular direction gets through. This effect can be switched on and off by applying an electric field across the liquid crystal. In an LCD screen, an array of small liquid crystal picture elements (pixels) is lit from behind by a bright backlight. Each pixel can be switched from bright to dark electronically, and the array of pixels can be controlled by a data signal, just like the screen of the cathode ray tube in a TV set.
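The switching can be modelled with Malus's law, I = I₀ cos²θ, which applies to polarised light meeting a polarising filter at angle θ. This sketch assumes a twisted-nematic pixel between crossed polarisers. In such a pixel the liquid crystal rotates the plane of polarisation by 90° with no field applied, and not at all under a strong field. Treating intermediate fields as a simple partial rotation is a further simplification.

```python
import math

# Idealised twisted-nematic pixel between crossed polarisers (an assumed LCD
# type). The liquid crystal rotates the plane of polarisation by `twist_deg`;
# the second polariser (analyser) sits at 90 degrees to the first.
# Malus's law: I = I0 * cos^2(angle between polarisation and analyser).


def pixel_transmission(twist_deg: float, analyser_deg: float = 90.0) -> float:
    """Fraction of the already-polarised backlight that leaves the pixel."""
    angle = math.radians(analyser_deg - twist_deg)
    return math.cos(angle) ** 2


# No field: full 90 degree twist (bright). Strong field: no twist (dark).
for twist in (90, 45, 0):
    print(f"twist {twist:2d} deg -> transmission {pixel_transmission(twist):.2f}")
```

In this model the pixel is bright with no field and dark with a strong field, and intermediate settings give shades of grey.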

Each pixel is connected individually to a thin film transistor (TFT). Many thousands of these transistors make up a single large block, which is a kind of microchip. Because each pixel is controlled individually, the pixels can be brighter, and their brightness can also be changed faster, so that the screen is ‘refreshed’ more often. As these screens become cheaper and larger, they are already used in place of CRTs for computer monitors and are taking over for television screens.
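The following back-of-envelope count gives a sense of scale for a modern active-matrix screen. The resolution, the three sub-pixels per pixel and the 60 Hz refresh rate are all assumptions. So is the simple model in which the rows are set one at a time.

```python
# Back-of-envelope numbers for an active-matrix (TFT) screen.
# Assumptions: 1920 x 1080 pixels, three colour sub-pixels per pixel,
# rows refreshed one at a time, 60 full-screen refreshes per second.
WIDTH, HEIGHT, SUBPIXELS, REFRESH_HZ = 1920, 1080, 3, 60

transistors = WIDTH * HEIGHT * SUBPIXELS        # one TFT per sub-pixel
row_time_us = 1e6 / (REFRESH_HZ * HEIGHT)       # time available to set one row

print(f"{transistors:,} TFTs")                   # 6,220,800 TFTs
print(f"{row_time_us:.1f} microseconds per row") # 15.4 microseconds per row
```

On these assumptions a full-HD panel has over six million transistors, and each row of pixels must be set in roughly 15 microseconds.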

BEYOND THE VISIBLE SPECTRUM

An image is a record of information, and increasingly that information is coded digitally. The object used as the basis of an image need not be visible to the eye; that is, it need not be a source or reflector of visible light. The whole range of the electromagnetic spectrum may be used to make an image. For example, X-rays, ultraviolet and infrared have long been used to make visible images on photographic film. Images of soil and even the depths of the sea can be made using radar of different wavelengths. Images are now also made using sound, electrons (as waves), and even changes in the tiny magnetic fields of spinning nuclei.

Ultrasound imaging

Ultrasound scanners are now commonly used in medicine to investigate and diagnose disorders. Ultrasound consists of sound waves of very high frequency (above the range of human hearing) and correspondingly short wavelength. Different body tissues absorb and reflect these sound waves to different degrees, so images can be made of organs deep within the body. Remember that sound is not part of the electromagnetic spectrum.
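The wavelength follows from v = fλ. The depth of a reflecting surface can be found from the time its echo takes to return, which is the pulse-echo method. Below is a minimal sketch. It assumes an average speed of sound in soft tissue of about 1540 m/s and an illustrative 5 MHz probe.

```python
# Ultrasound wavelength and echo timing. Assumption: sound travels at about
# 1540 m/s in soft tissue (a commonly used average value).
SPEED_IN_TISSUE = 1540.0  # m/s


def wavelength_mm(frequency_hz: float) -> float:
    """Wavelength in millimetres, from v = f * lambda."""
    return SPEED_IN_TISSUE / frequency_hz * 1000


def reflector_depth_cm(echo_delay_s: float) -> float:
    """The pulse goes down and back, so depth is half the distance travelled."""
    return SPEED_IN_TISSUE * echo_delay_s / 2 * 100


print(f"5 MHz -> wavelength {wavelength_mm(5e6):.2f} mm")
print(f"echo after 65 us -> depth {reflector_depth_cm(65e-6):.1f} cm")
```

A wavelength of about 0.3 mm helps explain why ultrasound can show structures down to roughly millimetre size. An echo arriving 65 µs after the pulse comes from about 5 cm deep.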

Magnetic resonance imaging

Hospitals commonly run appeals to raise funds for a ‘scanner’. This is a device that can probe body systems in even more detail than ultrasound. It uses a technique called magnetic resonance imaging, or MRI, which relies on the magnetic properties of atomic nuclei. The low-intensity electromagnetic waves it uses are at radio frequencies. Unlike X-rays, they are non-ionising, so they do not damage biological tissues in the way ionising radiation can.
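The radio frequency used is set by the magnetic field. Hydrogen nuclei resonate at the Larmor frequency f = γB/2π, where γ/2π ≈ 42.58 MHz per tesla for protons. This sketch uses that standard value with assumed field strengths of 1.5 T and 3 T. It also works out the energy of one photon at that frequency.

```python
# Resonance (Larmor) frequency of hydrogen nuclei in an MRI magnet:
# f = (gamma / 2 pi) * B, with gamma/2pi ~ 42.58 MHz per tesla for protons.
GAMMA_BAR_MHZ_PER_T = 42.58
PLANCK_H = 6.626e-34   # J s
EV = 1.602e-19         # joules per electronvolt


def larmor_frequency_hz(field_t: float) -> float:
    """Proton resonance frequency in a field of `field_t` tesla."""
    return GAMMA_BAR_MHZ_PER_T * 1e6 * field_t


def photon_energy_ev(frequency_hz: float) -> float:
    """Energy of one photon at this frequency, E = h f, in electronvolts."""
    return PLANCK_H * frequency_hz / EV


for field_t in (1.5, 3.0):   # common clinical field strengths (assumed)
    f_hz = larmor_frequency_hz(field_t)
    print(f"B = {field_t} T -> f = {f_hz / 1e6:.1f} MHz, "
          f"photon energy {photon_energy_ev(f_hz):.1e} eV")
```

The frequencies come out at tens to just over a hundred megahertz. Each photon carries only about 10⁻⁷ eV, millions of times less than the few electronvolts involved in chemical bonds.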

IMAGING THE ULTRA-SMALL

The transmission electron microscope

Quantum physics produced the greatest surprise of all when it suggested that, just as light waves have a particle aspect, so material particles should have a wave aspect. The realisation that electrons – as waves – could be used to produce images in much the same way that light waves do, led to the development of the electron microscope.

In an electron microscope, an electron beam is projected through a series of electromagnets shaped in a ring which act as ‘lenses’ to change the direction of the beam. The first lens is a condenser lens. This lens may be used to make the beam parallel or to focus on the target (the object being studied) to increase the ‘illumination’. The second magnetic ring acts as the objective lens and forms a magnified image of the target. The third electromagnet acts rather like the eyepiece on a light microscope, magnifying the image further and forming an image on a screen or on photographic film.

The problem with making images of any kind with waves is diffraction. Diffraction limits the resolution of an image, that is, how far apart objects, or details in an object, must be before they can be seen as separate in the image. The limit of resolution for a microscope or telescope is given by the Rayleigh criterion. Two points are just resolved when the central maximum of one point's diffraction pattern falls on the first minimum of the other's.

The clarity of the image is determined by the wavelength: the shorter, the better. Blue and violet light have the shortest wavelengths in the visible range, though ultraviolet may be used in photomicrography. Visible wavelengths are of the order of a few hundred nanometres, about 4 to 7 × 10⁻⁷ m.
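The effect of wavelength on resolution can be seen from the Rayleigh criterion in the form used in the summary, θ ≈ λ/d. Below is a short sketch. The 10 cm aperture is hypothetical, and the usual factor of 1.22 for a circular aperture is included as an option.

```python
# Rayleigh criterion in the form used in this article, theta ~ lambda / d
# (radians). For a circular aperture the usual factor is 1.22 * lambda / d.


def min_resolvable_angle(wavelength_m: float, aperture_m: float,
                         circular: bool = False) -> float:
    """Smallest angular separation (radians) of two just-resolved points."""
    factor = 1.22 if circular else 1.0
    return factor * wavelength_m / aperture_m


APERTURE = 0.10  # hypothetical 10 cm telescope objective
for colour, wl in (("blue", 450e-9), ("red", 650e-9)):
    theta = min_resolvable_angle(wl, APERTURE, circular=True)
    print(f"{colour:4s}: {theta:.2e} rad")
```

Through the same aperture, blue light gives a smaller minimum resolvable angle than red light, which is why shorter wavelengths give clearer images.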

In an electron microscope, electrons are accelerated to a high speed before hitting the object being studied. The higher the speed, the shorter the wavelength, and so the better the resolution. The wavelength of an electron in an electron microscope is typically about 10⁻¹¹ m. This is tens of thousands of times shorter than the wavelength of visible light, so in principle the resolution can be correspondingly better. But, as with light microscopes, there are aberrations (distortions). The basic ones are:

- the magnetic field of each lens cannot be shaped perfectly (i.e. the lenses have defects),

- electrons repel each other, so their paths deviate and produce imperfections in the final image,

- electrons slow down as they pass through the target, so they are not focused to the right spot in the final image.
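The electron wavelength follows from the de Broglie relation λ = h/p. Below is a minimal sketch for an electron accelerated from rest through V volts, including the relativistic correction to its momentum. The three voltages are illustrative assumptions.

```python
import math

# de Broglie wavelength lambda = h / p of an electron accelerated from rest
# through V volts, with the relativistic momentum correction.
H = 6.626e-34        # J s
M_E = 9.109e-31      # kg
Q_E = 1.602e-19      # C
C = 2.998e8          # m/s


def electron_wavelength_m(voltage: float) -> float:
    """Wavelength (m) of an electron after acceleration through `voltage` volts."""
    kinetic = Q_E * voltage                                  # eV in joules
    momentum = math.sqrt(2 * M_E * kinetic * (1 + kinetic / (2 * M_E * C**2)))
    return H / momentum


for kv in (10, 100, 200):   # illustrative accelerating voltages (assumed)
    print(f"{kv:3d} kV -> {electron_wavelength_m(kv * 1e3):.2e} m")
```

About 10 kV gives a wavelength near 10⁻¹¹ m, and higher voltages give shorter wavelengths still, consistent with the description above.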

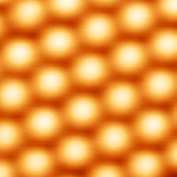

The scanning tunnelling microscope

The image in the figure below was produced by a scanning tunnelling microscope. It shows the individual atoms at the surface of a crystal of silicon. The image was formed by exploiting a strange property of the electron: it can behave as if it is in several places at once. According to quantum theory, an electron may be found at any place where its wave function is non-zero.

In the scanning tunnelling microscope, a very fine needle passes at a constant tiny distance over the surface of an object, like the needle of a record player over the groove of a record, but without quite touching it. The needle has a probability of ‘capturing’ electrons from the surface, even though classical physics says that the electrons do not have enough energy to escape. The electrons are pictured as tunnelling through a potential energy barrier.

The more electrons there are at a position on the surface (at the outer boundaries of atoms), the more electrons will ‘tunnel’. The variation in the current they produce can be used to build up an image at the atomic level of detail. All this is done with just a small positive voltage on the needle.
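A standard textbook model, not given in the text above, shows why the tunnelling current is so sensitive to position. For a simple one-dimensional barrier, the current falls off as exp(−2κd), where d is the gap and κ = √(2mφ)/ħ depends on the barrier height φ. The 4 eV barrier height used below is an assumed, typical order of magnitude.

```python
import math

# Why tunnelling current is so sensitive to the tip-surface gap: in a simple
# one-dimensional barrier model, I is proportional to exp(-2 * kappa * d),
# with kappa = sqrt(2 m phi) / hbar. The 4 eV barrier height is an assumption.
HBAR = 1.0546e-34   # J s
M_E = 9.109e-31     # kg
EV = 1.602e-19      # J


def decay_constant_per_m(barrier_ev: float) -> float:
    """kappa for a rectangular barrier of the given height."""
    return math.sqrt(2 * M_E * barrier_ev * EV) / HBAR


def relative_current(gap_m: float, barrier_ev: float = 4.0) -> float:
    """Current relative to an (unphysical) zero gap; only ratios are meaningful."""
    return math.exp(-2 * decay_constant_per_m(barrier_ev) * gap_m)


ANGSTROM = 1e-10
ratio = relative_current(5 * ANGSTROM) / relative_current(6 * ANGSTROM)
print(f"moving the tip 0.1 nm closer multiplies the current by about {ratio:.1f}")
```

Changing the gap by just 0.1 nm, about an atomic-scale step, changes the current by a factor of roughly eight. This is why the current can follow the surface at the atomic level of detail.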

The scanning tunnelling microscope has the following advantages over the electron microscope: it is very useful indeed for studying surfaces that would be damaged by high-voltage electron bombardment, and it does not require a vacuum to produce the images.

SUMMARY

As a result of studying the topics in all these articles on Imaging in Physics, you should:

- Understand the importance of images and imaging in modern life.

- Understand how lenses form images, and be able to distinguish between real and virtual images.

- Know how to find the position and size of images in single lenses and mirrors both by drawing and by calculation, using the lens formulae: 1/u + 1/v = 1/f and M = v/u.

- Understand the meaning of the power of a lens in dioptres, P = 1/f (with f in metres).

- Understand resolution and its calculation by the Rayleigh criterion: θ = λ/d

- Understand total internal reflection and the significance of the critical angle, given by n = 1/sin c.

- Understand how a range of optical devices work (magnifying glass, refracting and reflecting astronomical telescopes, compound microscope), including the eye, its possible defects and their correction.

- Be able to calculate the magnifying powers of telescopes and microscopes, using, for an astronomical telescope, the formula m = fo/fe (fo and fe being the focal lengths of the objective and eyepiece).

- Understand the basic physical principles underlying some modern imaging systems such as CCDs, display screens, the ultrasound scanner, magnetic resonance imaging, the electron microscope and the scanning tunnelling microscope.
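Two of the summary formulas can be written as small helper functions for quick checks. The refractive index of 1.5 and the focal lengths used are illustrative values. The magnifying-power formula applies to an astronomical telescope in normal adjustment.

```python
import math

# Two of the summary formulae as small helpers.


def critical_angle_deg(n: float) -> float:
    """Critical angle from n = 1 / sin c (light going from the medium into air)."""
    return math.degrees(math.asin(1 / n))


def telescope_magnifying_power(f_objective: float, f_eyepiece: float) -> float:
    """m = fo / fe for an astronomical telescope in normal adjustment."""
    return f_objective / f_eyepiece


print(f"glass, n = 1.5: c = {critical_angle_deg(1.5):.1f} deg")
print(f"fo = 1.0 m, fe = 25 mm: m = {telescope_magnifying_power(1.0, 0.025):.0f}")
```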

Till next time, I remain my humble self, @emperorhassy.

Thanks for reading.

