It has been quite some time since I last posted a witness update log on Hive, as I have not had the time lately for any Hive-related updates. Anyway, here we are.

HF24

The main highlight of the post, and the highly anticipated blockchain fork on Hive. Let's review all the important updates.

Resumable node replays

Finally, something I have always wanted as a node operator. It was frustrating to realize in the middle of a replay that you had missed something in database.cfg or forgotten to increase the open file limit for RocksDB, since the replay then had to start over.

This feature has already proven extremely useful: I'm currently replaying Hive with the HF24 code on my Hackintosh to benchmark replay performance and system resource usage across different configurations and hardware (MIRA vs BMIC MMAP, SSD vs HDD, etc.).

Reduced memory usage

One of the biggest highlights for helping Hive scale: the memory requirements for running a Hive node have decreased, at least on non-MIRA nodes from what I have noticed.

According to several witnesses, a non-MIRA low memory consensus node will only require 16GB of RAM. The release notes on the Hive repository state that 16GB of RAM is sufficient for full API nodes, which I assume refers to MIRA nodes, as my full hived node has never exceeded 11GB of RAM usage.

This doesn't seem to hold true for my setup: my non-MIRA (low memory) HF24 replay is at 24M blocks at the time of typing this post and is already consuming 10.6GB of RAM. Therefore I don't think 16GB of RAM is enough to replay all the way to the head block (unless I misconfigured something).

I will be making a dedicated post on the benchmark results in a week once I have the numbers ready.

Faster replay

According to the official release notes:

> A full replay currently takes 16-18 hours. Previously this took several days.

The configuration behind these numbers is unspecified. I can't really compare against a non-MIRA replay on v0.23.0, as I have never done one pre-HF24 and cannot do so without taking down my full API node (for those who are new, HF23 and below require 64GB of RAM for a low memory node).

I'm 12 hours into my non-MIRA HF24 replay as I type this, and it's at 24M blocks.
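As a rough back-of-the-envelope check on those numbers, the sketch below computes the average replay rate so far and a naive time-to-finish estimate. It assumes the rate stays constant, which is likely optimistic if later blocks are heavier to process, and the head block number used in the example is a placeholder rather than a figure from this post.

```python
def blocks_per_hour(blocks_replayed: int, hours_elapsed: float) -> float:
    """Average replay rate so far."""
    if hours_elapsed <= 0:
        raise ValueError("hours_elapsed must be positive")
    return blocks_replayed / hours_elapsed


def hours_remaining(blocks_replayed: int, hours_elapsed: float, head_block: int) -> float:
    """Naive estimate: assumes the average rate so far holds until head block."""
    rate = blocks_per_hour(blocks_replayed, hours_elapsed)
    return max(head_block - blocks_replayed, 0) / rate


# Figures from this post: 24M blocks replayed after 12 hours.
rate = blocks_per_hour(24_000_000, 12)

# HYPOTHETICAL_HEAD_BLOCK is a placeholder; substitute the real head block number.
HYPOTHETICAL_HEAD_BLOCK = 48_000_000
eta = hours_remaining(24_000_000, 12, HYPOTHETICAL_HEAD_BLOCK)

print(f"{rate:,.0f} blocks/hour, ~{eta:.1f} hours left at that rate")
```

Treat the estimate as a lower bound at best; the actual benchmark numbers are what matter.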

30 day staking requirement

In HF24, all new Hive powered up through the transfer_to_vesting operation will be subject to a 30-day waiting period before it can be used for voting in governance and proposals.

What I don't know is whether, if I start a power down right after powering up, it will power down the newly powered-up tokens first or the older Hive Power first. I haven't looked into the code changes yet (I'm a noob at C++), so if any dev knows, please leave a comment below.

DHF changes

As one of the features on Hive that sparked a lot of drama over the last few months, the DHF has received a lot of welcome (and not-so-welcome) changes.

The proposal creation fee has increased to 10 HBD base + 1 HBD per day, which will definitely deter unnecessarily long-running proposals. The ability to change proposal details, such as editing the title or decreasing the payout, can be helpful if the proposer made a typo, but it is unspecified whether the proposal URL can be modified.
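To put numbers on the fee, here is a minimal sketch of the formula as worded above. The `free_days` parameter is an assumption added for illustration: the daily fee may only apply to days beyond some threshold, so check the release notes before relying on the default of 0, which simply matches the wording in this post. The value 60 in the example is likewise illustrative.

```python
def proposal_creation_fee_hbd(duration_days: int, base_fee: int = 10,
                              daily_fee: int = 1, free_days: int = 0) -> int:
    """Proposal creation fee in HBD: base fee plus a per-day fee.

    free_days is a hypothetical knob: days up to this count are not
    charged the daily fee. The default 0 charges every day.
    """
    if duration_days < 0:
        raise ValueError("duration_days must not be negative")
    billable_days = max(duration_days - free_days, 0)
    return base_fee + daily_fee * billable_days


# A 90-day proposal, charging every day (the wording used in this post).
print(proposal_creation_fee_hbd(90))

# Same proposal if only days beyond an assumed threshold are charged.
print(proposal_creation_fee_hbd(90, free_days=60))
```

Either way, the cost grows linearly with duration, which is what discourages proposals that run for years.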

However, I think that the funds in the new @hive.fund account should be taken into account for debt ratio calculation, as these funds do exist in the circulating supply. Unless a burn proposal gets voted in, these funds will eventually get paid out to workers at some point regardless of the state of the return proposal.

More airdrops are finally coming!

If you were excluded from the airdrop by mistake or got voted above the airdrop proposal requirement, you will finally receive your airdrop on 16th of September, provided nothing goes wrong!

Chain ID change, interesting versioning, rebranding

This section is more for developers, who need to update their applications for the new chain ID and the rebranding.

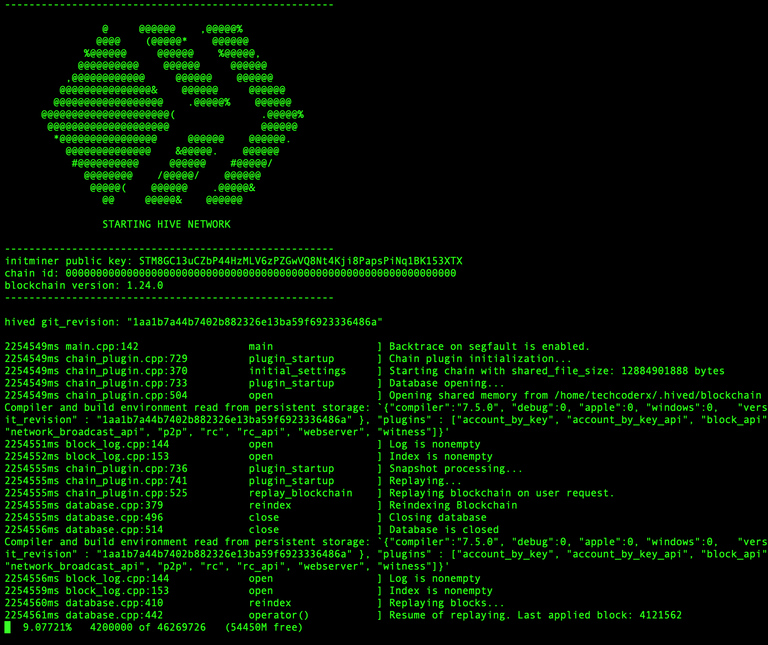

The new chain ID seems to have been hand-picked, as it resembles "Bee Abode", which basically describes "Hive" in a nutshell. Even the release notes contain a typo that confuses 0 with O. A single character of difference and you could be pointing to a completely different chain. This is the new Hive chain ID:

beeab0de00000000000000000000000000000000000000000000000000000000
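Since a 0/O mix-up is easy to miss by eye, a small sanity check can catch it before an application ships. The sketch below is illustrative only: `check_chain_id` is a hypothetical helper, not part of any Hive library, and it simply verifies that a candidate string is 64 hex characters and matches the chain ID above.

```python
import string
from typing import List

# The HF24 chain ID from this post: "beeab0de" followed by 56 zeros (32 bytes).
HIVE_CHAIN_ID = "beeab0de" + "0" * 56


def check_chain_id(candidate: str) -> List[str]:
    """Return a list of problems found; an empty list means it matches."""
    problems = []
    if len(candidate) != 64:
        problems.append(f"expected 64 characters, got {len(candidate)}")
    # Collect anything that is not a hex digit, such as the letter O.
    bad = sorted({c for c in candidate if c not in string.hexdigits})
    if bad:
        problems.append(f"non-hex characters: {''.join(bad)}")
    if not problems and candidate.lower() != HIVE_CHAIN_ID:
        problems.append("valid hex, but not the HF24 Hive chain ID")
    return problems


typo = "beeabOde" + "0" * 56  # letter O instead of zero
print(check_chain_id(HIVE_CHAIN_ID))
print(check_chain_id(typo))
```

A check like this belongs in application startup or a unit test, so a copy-paste error fails loudly instead of producing signatures for the wrong chain.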

As you may notice in the terminal output (or in the code or release tag), the blockchain version is now v1.24.0, which suggests a major version bump from v0 to v1.

Every Hive operation has been fully rebranded to use HIVE/HBD. I will be updating and testing the OneLoveIPFS uploader and DTube's Hive-related code for the relevant API changes.

Interestingly, our Hive public keys have not been rebranded (unless the release notes simply don't mention it) to begin with something like HVE instead of STM.

New project

After one of my past Hive projects failed, I think it is vital as a witness to keep working on at least one.

Introducing Alive, a decentralized live streaming protocol that scales horizontally across multiple chains including Hive (due to potential high bandwidth/RC use).

Alive uses HLS for recording and delivering live streams, IPFS and Skynet as storage backends for the actual stream chunk data, and GunDB for housing the live chat and caching .ts stream chunks, so that streamers do not need 5,000 HP to have enough RC to live stream on Hive.

I made a demo 2 months ago showing how this works on Avalon. It will work similarly on Hive: the specialized transactions are replaced with custom_json transactions, and replayable databases will store those hashes and provide the relevant APIs, which any Hive application can use.
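To make the custom_json idea concrete, here is a minimal sketch of what such an operation could look like. The outer envelope (required_auths, required_posting_auths, id, json) follows Hive's custom_json operation format. Everything else is a hypothetical placeholder and not Alive's actual schema: the `alive_demo` id, the payload field names, and the example hash.

```python
import json


def build_stream_chunk_op(streamer: str, stream_link: str,
                          chunk_hash: str, chunk_seconds: float) -> list:
    """Build an unsigned custom_json operation announcing one stream chunk.

    The payload layout is hypothetical and only illustrates the idea of
    recording chunk hashes on chain while the chunk data lives elsewhere.
    """
    payload = {
        "op": "push_chunk",     # hypothetical action name
        "link": stream_link,    # hypothetical stream identifier
        "hash": chunk_hash,     # IPFS hash or Skylink of the .ts chunk
        "len": chunk_seconds,   # chunk duration in seconds
    }
    return ["custom_json", {
        "required_auths": [],
        "required_posting_auths": [streamer],  # posting authority signs it
        "id": "alive_demo",                    # hypothetical custom_json id
        "json": json.dumps(payload, separators=(",", ":")),
    }]


op = build_stream_chunk_op("alice", "my-stream", "QmPlaceholderHash", 10.0)
print(json.dumps(op, indent=2))
```

A replayable database would then scan blocks for operations with the chosen id, parse the json field, and index the hashes per stream. Signing and broadcasting are left out here, as they depend on the client library used.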

I know that the Alive GitHub repos have not been active lately, but I hope to be able to resume development as soon as possible.

Witness performance

Let's see how well my witness performed lately :)

Current rank: 93rd (active rank 86th)

Votes: 3,755 MVests

Voter count: 110

Producer rewards (7 days): 23.28 SP

Producer rewards (30 days): 99.64 SP

Missed blocks: None!

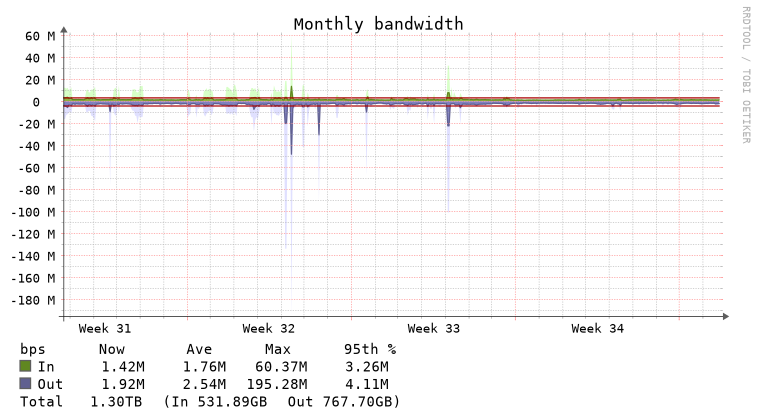

Server resource statistics

This section will be present in every witness update log (if any of my nodes are online) to give new witnesses up-to-date information about the system requirements for running a Hive node.

hived (v0.23.0)

block_log file size: 279 GB

blockchain folder size: 693 GB

Account history RocksDB size: 293 GB

RAM usage: 7.82 GB

Server network utilization (last 30 days)

Support

I am currently subsidising server expenses myself for the first year. If you like what I'm doing, please consider supporting me by voting for my witness.

Great update and summary of HF24.

Keep up the good work!

Wow using Skynet to livestream to Hive. Now, THAT is thinking outside of the box. Very cool summary! Reblogged

Actually, Alive is a fork from Skylive but instead of storing the hashes and Skylinks on a centralized server it gets stored on the blockchains instead.

Also it will be getting a brand new UI to post, start and end streams.

Wow that sounds great!

Are you whitelisting hosts for your portal? It took a bit of digging into the siac code but there is a hostcmddb function as I recall that you can specify private keys. It is cool because imo it allows more granularity of control to distribute the infrastructure globally.

The catch is, of course, making sure there are enough whitelisted hosts for redundancy / fault tolerance but I see potential to customize incentive models among other possibilities. In any case, very cool what you are doing and definitely going to keep an eye on this.

Is this going to be open source? If so, would be glad to star the repo and keep an eye out for opportunities to contribute. Disregard, realized the link is in your reply. 👍

Currently OneLoveIPFS is running an upload-only portal for subscribers (subsidized by me). I don't remember specifying any hosts for whitelisting on my Sia node.

I'm not too sure about what I will be running for Alive in production, as I have to consider the costs of running it on IPFS (cheaper, potentially fewer hosts) vs Skynet (more expensive, more hosts).

Best thing that can happen is that every streamer runs their own IPFS node/Skynet portal that they push their streams to, but I don't see that happening on day one.

I run a Sia host and have been planning to run a Skynet web portal; however, I understand many do so at a loss. Would like to develop a reasonable cost model.

Not merely for streaming, which may be short term, but for long term data storage. I know there is a lot of power under the hood of nginx: load balancing, reverse caching capability, etc.

Just haven't really had the time or motivation to play around with it. Just not yet 👍

Hope all well with you bro
